AI is penetrating many aspects of human life, so research both in AI itself and in interdisciplinary areas involving AI is much needed. We need to build a critical mass in AI, together with an environment that offers an easier path to learning and conducting research; the establishment of the distributed AI laboratory in 2020 is a start toward creating such an environment.

As more people in the Institute conduct research with AI, more related research projects will be realized. While the CIS staff are applying for other AI-related research projects, collaboration with other schools on new projects has also started.

The objectives are:

Please also check the Vision and Mission of the School of CIS.

Dr. Ricky Kwok Cheong AU, School of Social Sciences

Dr. Patrick Chi Wai LEE, School of Humanities and Languages

Dr. Charles Kin Man CHOW, School of Business and Hospitality Management

The AI facility consists of:

Research using the in-house AI facility is therefore supported both for tasks that need high-power AI servers and for average daily tasks.

Some interfaces are also being established to support interdisciplinary research, i.e., research in fields traditionally outside computer science that may now involve AI.

Some IoT devices with network cards are being set up for research on distributed AI in networks.

Please contact the School of Computing and Information Sciences or visit the School's office.

| Date | Topic | Speaker |
| --- | --- | --- |
| 29 November 2019 | Enhancing the Way to Secure Research Fund from UGC | Prof. Wan-Chi SIU |
| 20 February 2020 | Neural Network & BP Algorithm | Dr. Yingchao ZHAO |
| 12 March 2020 | Non-linear Function and Filter Structure in CNN | Prof. Wan-Chi SIU |
| 2 April 2020 | Distributed and Federated Learning | Prof. H. Anthony CHAN |
| 16 April 2020 | AlexNet – A Standard Structure of CNN | Prof. Wan-Chi SIU |
| 4 May 2020 | End-to-end CNN and Image Segmentation | Dr. Xueting LIU |
| 21 May 2020 | ResNet – A Breakthrough in Deep Learning | Prof. Wan-Chi SIU |
| 19 June 2020 | Reinforcement learning basis and application case in networking: Augmenting Drive-thru Internet via Reinforcement Learning based Rate | Dr. Wenchao XU |
| 2 July 2020 | Interdisciplinary research to prepare for the Age of Artificial Intelligence | Prof. H. Anthony CHAN |
| 6 July 2020 | GAN – Generative Adversarial Network | Prof. Wan-Chi SIU |
| 20 July 2020 | GAN (Part II) – Training and More Applications | Prof. Wan-Chi SIU |
| 11 August 2020 | Introduction to AI in Natural Language Processing | Prof. H. Anthony CHAN |
| 25 August 2020 | Lab Highlight and Region-based Convolutional Neural Network | Prof. Wan-Chi SIU |
| 8 September 2020 | More RCNN and Additions to Lab Highlight | Prof. Wan-Chi SIU |
| 19 November 2020 | Reinforcement learning demo: Q learning and deep Q learning | Dr. Wenchao XU |
| 8 December 2020 | Baseline Model Design with Joint Back Projection and Residual Network | Prof. Wan-Chi SIU |
| 15 December 2020 | Baseline Model Design with Joint Back Projection and Residual Network (Part II) | Prof. Wan-Chi SIU |
| 7 January 2021 | Data and Industry Trend | Prof. H. Anthony CHAN |
| 8 February 2021 | Part 1: Enhancing the Ways to Secure Research Fund from UGC; Part 2: Convolutions | Prof. Wan-Chi SIU |
| 22 April 2021 | Learning Adaptive Graphs from Data Analysis | Hui LIU |
| 29 April 2021 | Deep Learning with YOLO: An Update of its Recent Development | Dr. Zhi-Song LIU |
| 12 May 2021 | Advancement of NLP with Deep Learning | Prof. Francis CHIN |
| 20 May 2021 | Non-Local Means and Attention Network in Deep Learning | Prof. Wan-Chi SIU |
| 3 June 2021 | Attention and Transformer Network in Deep Learning | Prof. Wan-Chi SIU |
| 24 June 2021 | Attention for Image Super-Resolution | Dr. Zhi-Song LIU |
| 29 July 2021 | Guiding Behavior using AI-Enabled Communication with Virtual Bosom Friend | Kinson CHEUNG |
| 16 August 2021 | Transformers for Computer Vision Applications | Dr. Chengze LI |
| 10 September 2021 | Face recognition: Face Recognition via Convolution Neural Networks | Dr. Zhi-Song LIU |
| 23 September 2021 | Face recognition: A review of the classical learning approach | Prof. Wan-Chi SIU |
| 25 November 2021 | A Conventional Moving Object Detection Approach on Video/Traffic Scene | Eric W.H. CHENG |
| 9 December 2021 | Learning to See in the Dark | Allan C.Y. CHAN |
| 23 December 2021 | Further explanation of object detection models with deep learning | Chun Chuen HUI |
| 19 January 2022 | AI, Interdisciplinarity, and Transformation of the Society | Prof. H. Anthony CHAN |
| 11 May 2022 | Conditional Image Synthesis from Texts | Dr. Chengze LI |
| 11 October 2022 | Blockchain-based federated learning with smart contract | Alisdair CO LEE |
| 8 November 2022 | StyleGAN and its applications | Xi CHENG |
| 13 December 2022 | High quality image synthesis with diffusion model | Ziming HUANG |
| 7 February 2023 | Super-resolution using GAN model | Wing Ho Eric CHENG |
| 16 February 2023 | A review on "Repaint Model" for image inpainting | Chun Chuen Ryan HUI |
| 2 March 2023 | WiFi Fingerprinting indoor positioning using deep learning model | Xin Cindy CHEN |
| 7 March 2023 | Brief review on technologies for making a face picture to talk | Ridwan SALAHUDEEN |
| 3 May 2023 | What semiconductor technology is and the way China faces the challenge | Prof. Wan-Chi SIU |
| 29 September 2023 | A re-visit on the basis of the diffusion model | Chun Chuen Ryan HUI |
| 3 October 2023 | Low light image enhancement and back projection technique | Allan Cheuk Yiu CHAN |

A tutorial on Deep Learning has been initiated and is planned to be held in each of the 3 years of this IDS grant. It is a 2-day public tutorial, organized jointly with IEEE, that enables participants to learn about deep learning or deepen their understanding of it. Optional laboratory sessions provide hands-on practice.

Owing to the pandemic, the 2020 tutorial was postponed to 5-6 March 2021. The announcement is being publicized at 2-Days Tutorial on Deep Learning and through other professional organizations.

A workshop on Deep Learning has also been initiated and is planned to be held in each of the 3 years of this IDS grant. It is a 2-day continuing-education workshop organized jointly with IEEE.

Owing to the pandemic, the 2020 workshop was postponed to 19-20 March 2021. The announcement is being publicized at 2-Days Workshop on Deep Learning and through other professional organizations.

Huisi Wu, Yifan Li, Xueting Liu, Chengze Li, Wenliang Wu, "Deep texture cartoonization via unsupervised appearance regularization," Computers and Graphics 97 (2021) 99-107.

Prof. H. Anthony CHAN, BSc (HKU), MPhil (CUHK), PhD (Maryland), FIEEE

Professor and Dean, School of Computing and Information Sciences

5G Wireless network, Distributed machine learning, IETF (IPv6 Internet) standards, Components/Systems and Products Reliability

Room A804-1; Tel: 3702 4210; hhchan at sfu.edu.hk

SFU has been awarded an Institutional Development Scheme (IDS) Research Infrastructure Grant:

Establishment of Distributed Artificial Intelligence Laboratory for Interdisciplinary Research

The grant is $2.8 million for the period 1/1/2020 to 31/12/2022.

Team leader: Prof. CHAN Hing Hung Anthony

Team members:

Prof. SIU Wan Chi

Dr. LIU Xueting Tina

et al.


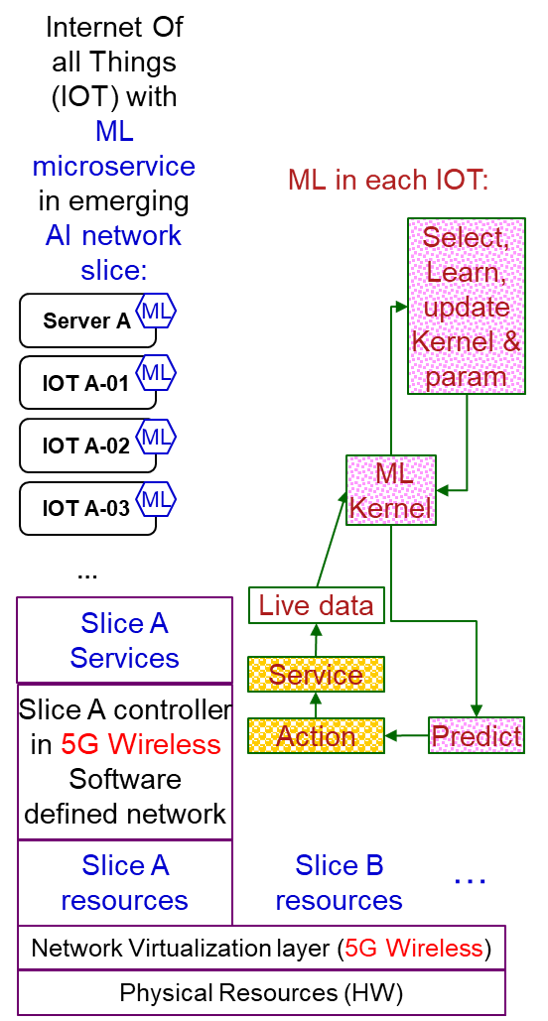

Current and emerging 5G wireless networks not only provide much higher data rates but are also expected to connect 100 billion Internet of Things (IoT) IP devices by 2025. A few important technologies are:

3GPP is also defining the architecture, functions, and interfaces that enable the use of machine learning in 5G/6G wireless networks. The Network Data Analytics Function (NWDAF) can employ machine learning to unlock the value of network data in the 5G core network, whereas machine learning may also be employed in the radio access network (RAN).

In addition, IoT devices provide large amounts of data to support learning, and the learning itself may be either distributed or centralized.
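The difference between centralized and distributed learning can be illustrated with a minimal sketch of federated averaging (FedAvg), a widely used distributed-learning scheme swapped in here for illustration rather than taken from the lab's own work. Each hypothetical IoT device fits a one-parameter linear model y ≈ w·x on its private data with a few gradient steps, and a server averages the returned weights in proportion to each device's sample count. The device data, learning rate, epochs, and number of rounds are all assumptions.

```python
# Minimal federated averaging (FedAvg) sketch for a 1-D linear model y ~ w * x.
# Device data, learning rate, epochs, and rounds are hypothetical illustrations.


def local_update(w, data, lr=0.01, epochs=5):
    """Run a few epochs of full-batch gradient descent on one device's private data."""
    for _ in range(epochs):
        # Gradient of the mean squared error (1/n) * sum((w*x - y)^2) with respect to w
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def fed_avg(devices, rounds=50, w0=0.0):
    """Server loop: broadcast w, collect local updates, average them by sample count."""
    w = w0
    total = sum(len(d) for d in devices)
    for _ in range(rounds):
        local_weights = [local_update(w, d) for d in devices]
        # Only model weights reach the server; the raw (x, y) pairs stay on the devices
        w = sum(len(d) * wk for d, wk in zip(devices, local_weights)) / total
    return w


# Three hypothetical IoT devices, each holding private samples of y = 3x
devices = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(3.0, 9.0)],
    [(0.5, 1.5), (1.5, 4.5), (2.5, 7.5)],
]
print(round(fed_avg(devices), 3))  # approaches the shared slope 3
```

A centralized alternative would pool all (x, y) pairs on one server and run `local_update` once on the combined list; FedAvg gives up that simplicity in exchange for keeping raw data on the devices.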

Prof. Wan-Chi SIU, MPhil (CUHK), PhD (Imperial College London), IEEE Life Fellow

Research Professor, School of Computing and Information Sciences

DSP and Fast Algorithms, Intelligent Image and Video Coding, HDTV and 3DTV, Super-Resolution with Machine Learning and Deep Learning, Visual Surveillance, Object Recognition and Tracking, Visual Technology for Vehicle Safety and Autonomous Car

Room A804; wcsiu at sfu.edu.hk

Prof. SIU has been awarded an Institutional Development Scheme (IDS) Collaborative Research Grant:

Creating an Automatic Football Commentary System with Image Recognition and Cantonese Voice Output

The grant is $7 million for the period 1/1/2021 to 31/12/2023.

Project Coordinator (PC): Prof. SIU Wan Chi

Co-PI: Prof. CHAN Sin-wai

Co-I: Prof. CHAN Hing Hung

Dr. Xueting Tina LIU, BEng, BA (Tsinghua), PhD (CUHK)

Assistant Professor, School of Computing and Information Sciences

Computer Graphics, Computer Vision, Machine Learning, Computational Manga and Anime

Room A806; Tel: 3702 4217; tliu at sfu.edu.hk

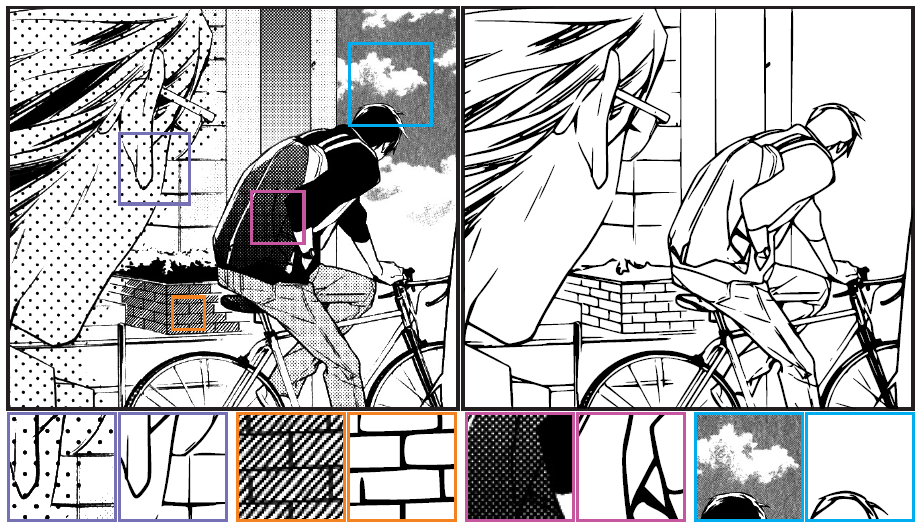

Given a pattern-rich manga image, we propose a novel method to identify its structural lines without making any assumptions about the screen patterns. To this end, we propose a deep network model that handles the large variety of screen patterns and improves output accuracy. We also develop an efficient and effective way to generate a rich set of training data pairs.
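One common way to build training pairs for separating structural lines from screen patterns is to start from a clean line drawing, which serves as the target, and overlay a synthetic screentone on the non-line regions to form the input. The sketch below does this for a tiny binary image with a regular dot pattern; the pattern generator `dot_screentone`, its `period` parameter, and the binary image representation are assumptions, not the procedure used in the work described above.

```python
def dot_screentone(height, width, period=3):
    """Hypothetical regular dot pattern: ink (1) at every `period`-th row and column."""
    return [[1 if r % period == 0 and c % period == 0 else 0 for c in range(width)]
            for r in range(height)]


def make_training_pair(lines, region, period=3):
    """Return (input, target) for one synthetic example.

    lines  -- binary line drawing (1 = ink), copied unchanged as the target
    region -- binary mask of the areas to fill with the hypothetical screentone
    """
    h, w = len(lines), len(lines[0])
    tone = dot_screentone(h, w, period)
    noisy = [[1 if lines[r][c] or (region[r][c] and tone[r][c]) else 0
              for c in range(w)] for r in range(h)]
    return noisy, [row[:] for row in lines]


lines = [[0, 0, 1, 0, 0, 0] for _ in range(6)]   # one vertical structural line
region = [[1, 1, 0, 1, 1, 1] for _ in range(6)]  # fill everything except the line
inp, target = make_training_pair(lines, region)
for row in inp:
    print("".join("#" if v else "." for v in row))
```

Varying the pattern type and `period` across examples is one simple way such a generator could yield a richer training set.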

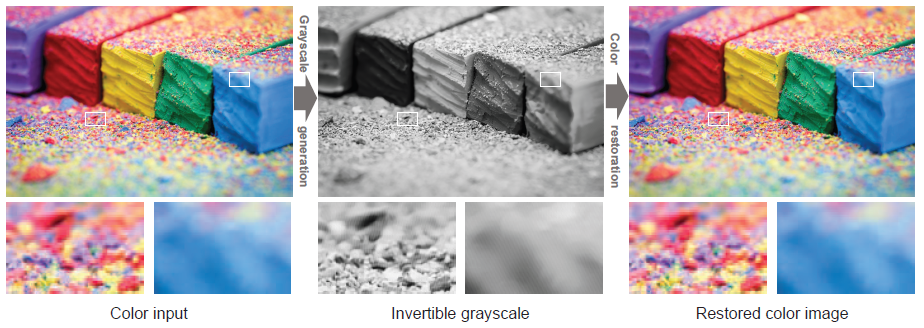

In this work, we propose an innovative method to synthesize invertible grayscale, that is, a grayscale image from which the original color image can be fully restored. The key idea is to encode the original color information into the synthesized grayscale in such a way that users cannot notice any anomalies. We propose to learn and embed the color-encoding scheme via a convolutional neural network (CNN). We then design a loss function to ensure that the trained network possesses three required properties: color invertibility, grayscale conformity, and resistance to quantization error.
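The three required properties map naturally onto a weighted sum of loss terms. The sketch below is an assumption-laden illustration, not the published loss: images are flat lists of (R, G, B) tuples in [0, 1], the reference grayscale uses ITU-R BT.601 luma weights, quantization is simulated by rounding to 256 levels, `decode` stands in for the hypothetical decoder half of the network, and the weights `w_gray` and `w_quant` are hypothetical.

```python
def luma(rgb):
    """Reference grayscale using ITU-R BT.601 luma weights (an assumed choice)."""
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b


def quantize(v, levels=256):
    """Simulate storing the grayscale as an 8-bit image."""
    return round(v * (levels - 1)) / (levels - 1)


def mse(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def flatten(pixels):
    return [c for p in pixels for c in p]


def invertible_gray_loss(color, gray, decode, w_gray=1.0, w_quant=1.0):
    """Combine the three objectives; `decode` is a hypothetical decoder function."""
    target = flatten(color)
    # 1. Color invertibility: decoding the grayscale should restore the color
    invert = mse(flatten(decode(gray)), target)
    # 2. Grayscale conformity: the grayscale should look like an ordinary one
    conform = mse(gray, [luma(p) for p in color])
    # 3. Quantization resistance: restoration should survive 8-bit rounding
    quant = mse(flatten(decode([quantize(g) for g in gray])), target)
    return invert + w_gray * conform + w_quant * quant


pixels = [(0.5, 0.5, 0.5), (0.2, 0.2, 0.2)]
replicate = lambda gs: [(g, g, g) for g in gs]  # toy decoder, only valid for neutral grays
print(invertible_gray_loss(pixels, [0.5, 0.2], replicate))  # close to zero here
```

In a trained system all three terms would be minimized jointly by back-propagation through both the encoder producing `gray` and the decoder; the toy decoder here only shows how the terms are assembled.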

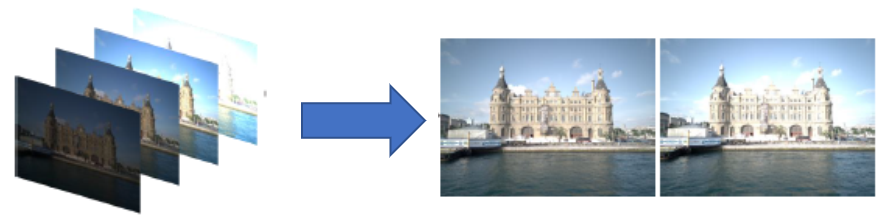

Tone mapping is a commonly used technique that maps the set of colors in high-dynamic-range (HDR) images to another set of colors in low-dynamic-range (LDR) images. Recently, with the increased use of stereoscopic devices, the notion of binocular tone mapping has been proposed. The key is to map an HDR image to two LDR images with different tone mapping parameters, one as the left image and the other as the right image, so that the binocular LDR image pair presents more human-perceivable visual content than any single LDR image. In this work, we propose the first binocular tone mapping operator to distribute visual content more effectively to an LDR pair, leveraging the strong representational power and interpretability of deep convolutional neural networks. Based on existing binocular perception models, we also propose novel loss functions that optimize the output pairs in terms of local details, global contrast, content distribution, and binocular fusibility.
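To make the idea of a binocular LDR pair concrete, the sketch below applies the classic global Reinhard operator L/(1 + L) to key-scaled luminance with two different hypothetical key values (0.36 and 0.09), producing a brighter view that keeps more shadow detail and a darker view that keeps more highlight detail. This is a hand-crafted stand-in: the work described above learns the mapping with a CNN and perceptual binocular losses, which the sketch does not attempt. The luminance values and 8-bit output are assumptions.

```python
import math


def log_average(lum, eps=1e-6):
    """Log-average (geometric mean) luminance used by Reinhard's global operator."""
    return math.exp(sum(math.log(eps + v) for v in lum) / len(lum))


def reinhard(lum, key):
    """Scale luminance by key / log-average, compress with L / (1 + L), quantize to 8 bits."""
    avg = log_average(lum)
    out = []
    for v in lum:
        scaled = key / avg * v
        out.append(round(255 * scaled / (1.0 + scaled)))
    return out


def binocular_pair(hdr_lum, key_left=0.36, key_right=0.09):
    """Map one HDR luminance list to two LDR views with different (hypothetical) keys."""
    return reinhard(hdr_lum, key_left), reinhard(hdr_lum, key_right)


hdr = [0.01, 0.05, 1.0, 20.0, 400.0]  # hypothetical HDR luminances
left, right = binocular_pair(hdr)
print("left: ", left)   # brighter: larger steps among dark values
print("right:", right)  # darker: larger steps among bright values
```

Each view alone loses detail at one end of the range; the point of a binocular pair is that the fused percept can retain detail from both.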

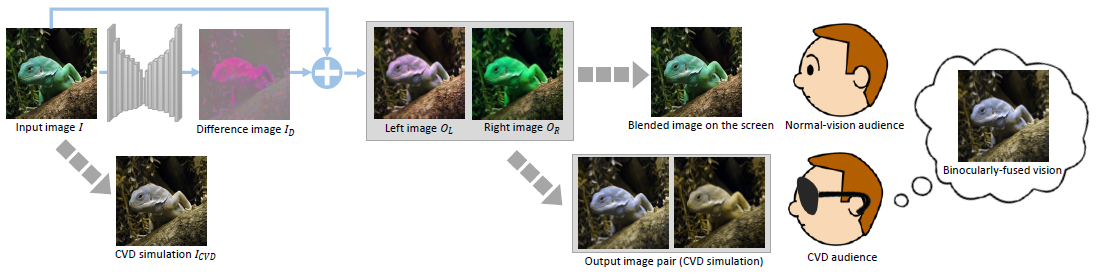

Visual sharing between color vision deficiency (CVD) and normal-vision audiences is challenging because multiple binocular visual requirements must be satisfied simultaneously: the experience must be color-distinguishable and binocularly fusible for CVD audiences without hurting the visual experience of normal-vision audiences. In this paper, we propose the first deep-learning-based solution to this visual sharing problem. To achieve this, we formulate the binocular image generation problem as the generation of a difference image, which can effectively enforce the binocular constraints. We also propose to retain only high-quality training data and to enrich the variety of training data by intentionally synthesizing various confusing color combinations.
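One plausible reading of why a difference image helps, assumed here rather than taken from the paper: if the left and right views are formed as I + D and I − D from the original image I and a predicted difference image D, then a viewer without glasses, who on a binocular display perceives roughly the average of the two views, still sees I, while a CVD viewer wearing glasses receives two different views whose disparity can make confusing colors distinguishable. The sketch assumes channel values in [0, 1], a simple averaging model of the no-glasses view, and a given D (in the work above, D is predicted by a network). Clipping to the displayable range breaks the exact average, so the sketch counts clipped values.

```python
def binocular_from_difference(image, diff):
    """Form left/right views as image + diff and image - diff, clipped to [0, 1].

    Returns both views and the number of channel values that had to be clipped;
    clipping breaks the exact averaging property assumed by this sketch.
    """
    left, right, clipped = [], [], 0
    for i, d in zip(image, diff):
        lv, rv = i + d, i - d
        if not (0.0 <= lv <= 1.0 and 0.0 <= rv <= 1.0):
            clipped += 1
        left.append(min(max(lv, 0.0), 1.0))
        right.append(min(max(rv, 0.0), 1.0))
    return left, right, clipped


def no_glasses_view(left, right):
    """Simplified viewing model: without glasses the two views blend to their average."""
    return [(a + b) / 2 for a, b in zip(left, right)]


image = [0.6, 0.3, 0.2]    # one hypothetical RGB pixel
diff = [-0.15, 0.15, 0.0]  # hypothetical predicted difference
left, right, clipped = binocular_from_difference(image, diff)
print(left, right, clipped)
print(no_glasses_view(left, right))  # matches image (up to rounding) when nothing is clipped
```

Because only one image D is generated, the averaging constraint for normal-vision viewers holds by construction, and the learning problem can focus on making D useful for CVD viewers.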

To share the same visual content between people with color vision deficiency (CVD) and normal-vision people, attempts have been made to allocate the two visual experiences of a binocular display (wearing and not wearing glasses) to CVD and normal-vision audiences, respectively. However, existing approaches are tailored only to still images. In this paper, we propose the first practical solution for fast synthesis of temporally coherent colorblind-sharable video. Our fast solution is accomplished with a convolutional neural network (CNN) approach. To avoid color inconsistency, we propose a global color transformation formulation: it first decomposes the color channels of each input video frame into several basis images and then linearly recombines them with the corresponding coefficients. Besides color consistency, the generated colorblind-sharable video also needs to satisfy four constraints: color distinguishability, binocular fusibility, color preservation, and temporal coherence. Instead of generating the left and right videos separately, we train our network to predict temporally coherent coefficients for generating a single difference video (between the left and right views), which is in turn used to generate the binocular pair.
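The global color transformation can be sketched as follows. Each output channel is a linear combination of a few basis images computed from the input frame, and the same small set of coefficients is applied to every pixel of the frame, so colors are transformed consistently; in this sketch, temporal coherence then amounts to keeping the coefficients smooth across frames. The basis (R, G, B and a constant image) and the exponential smoothing in `smooth_coeffs` are hypothetical choices; in the work above the coefficients are predicted by a CNN.

```python
def basis_images(frame):
    """Decompose a frame (list of (R, G, B) pixels) into hypothetical basis images.

    Basis: the R, G and B channels plus a constant image of ones.
    """
    return [
        [p[0] for p in frame],
        [p[1] for p in frame],
        [p[2] for p in frame],
        [1.0] * len(frame),
    ]


def recombine(frame, coeffs):
    """Apply one global color transform: out_c = sum_k coeffs[c][k] * basis_k."""
    basis = basis_images(frame)
    channels = [
        [sum(ck * b[i] for ck, b in zip(c_row, basis)) for i in range(len(frame))]
        for c_row in coeffs
    ]
    return list(zip(*channels))  # back to per-pixel (R, G, B) tuples


def smooth_coeffs(prev, new, alpha=0.8):
    """Hypothetical temporal smoothing: blend this frame's coefficients with the last."""
    return [[alpha * p + (1 - alpha) * n for p, n in zip(pr, nr)]
            for pr, nr in zip(prev, new)]


identity = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0]]  # 3 outputs x 4 basis images
swap_rg = [[0, 1, 0, 0], [1, 0, 0, 0], [0, 0, 1, 0]]
frame = [(0.9, 0.1, 0.2), (0.3, 0.7, 0.5)]
print(recombine(frame, identity))  # unchanged frame
print(recombine(frame, swap_rg))   # red and green exchanged for every pixel
```

Predicting 12 coefficients per frame instead of a full image is what keeps the transform global; a per-pixel prediction could map the same input color to different outputs.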

Dr. Chun Pong Jacky CHAN, BSc, PhD (CityUHK)

Assistant Professor

Computer Graphics, Computer Vision, Machine Learning, Character Animation, Health Care Technology

Room A810; Tel: 3702 4204; j2chan at sfu.edu.hk

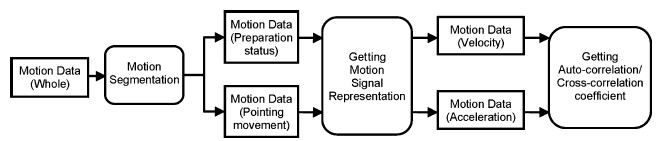

An objective assessment for determining whether a person has Parkinson's disease is proposed. It analyzes the correlation between joint movements, since Parkinsonian patients often have trouble coordinating different joints in a movement, and classifies subjects as having or not having Parkinson's disease using a least squares support vector machine (LS-SVM). Experimental results showed that using either auto-correlation or cross-correlation features for classification achieved over 91% correct classification.
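The two ingredients named above, correlation features between joint-movement signals and an LS-SVM, can be sketched in plain Python. The LS-SVM follows the standard formulation of Suykens and Vandewalle, in which training reduces to solving a single linear system rather than a quadratic program. The RBF kernel, `gamma`, `sigma`, the lag, and the toy feature vectors are assumptions, not the settings of the study.

```python
import math

# Sketch: correlation features from joint-movement signals + an LS-SVM classifier.
# The RBF kernel, gamma, sigma, lag, and toy data are hypothetical choices.


def corr(u, v):
    """Pearson correlation of two equal-length, non-constant sequences."""
    n = len(u)
    mu, mv = sum(u) / n, sum(v) / n
    cov = sum((a - mu) * (b - mv) for a, b in zip(u, v))
    su = math.sqrt(sum((a - mu) ** 2 for a in u))
    sv = math.sqrt(sum((b - mv) ** 2 for b in v))
    return cov / (su * sv)


def features(joint_a, joint_b, lag=1):
    """[auto-correlation of joint_a at `lag`, cross-correlation of joint_a and joint_b]."""
    return [corr(joint_a[:-lag], joint_a[lag:]), corr(joint_a, joint_b)]


def rbf(x, z, sigma=1.0):
    return math.exp(-sum((a - b) ** 2 for a, b in zip(x, z)) / (2 * sigma ** 2))


def solve(A, rhs):
    """Solve A x = rhs by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [r] for row, r in zip(A, rhs)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def lssvm_train(X, y, gamma=10.0, sigma=1.0):
    """Solve [[0, y^T], [y, Omega + I/gamma]] [b; alpha] = [0; 1]."""
    n = len(X)
    A = [[0.0] + [float(yk) for yk in y]]
    for k in range(n):
        row = [float(y[k])]
        for j in range(n):
            omega = y[k] * y[j] * rbf(X[k], X[j], sigma)
            row.append(omega + (1.0 / gamma if k == j else 0.0))
        A.append(row)
    sol = solve(A, [0.0] + [1.0] * n)
    return sol[0], sol[1:]  # bias b, support values alpha


def lssvm_predict(x, X, y, b, alpha, sigma=1.0):
    """Classify x by the sign of sum_k alpha_k * y_k * K(x, x_k) + b."""
    s = sum(a * yk * rbf(x, xk, sigma) for a, yk, xk in zip(alpha, y, X)) + b
    return 1 if s >= 0 else -1


# Hypothetical [auto-correlation, cross-correlation] feature vectors, two classes
X = [[0.9, 0.8], [0.85, 0.9], [0.2, 0.1], [0.3, 0.15]]
y = [1, 1, -1, -1]
b, alpha = lssvm_train(X, y)
print([lssvm_predict(x, X, y, b, alpha) for x in X])
```

Unlike a standard SVM, every training sample receives a nonzero support value in an LS-SVM, which is the price paid for the closed-form linear-system training.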

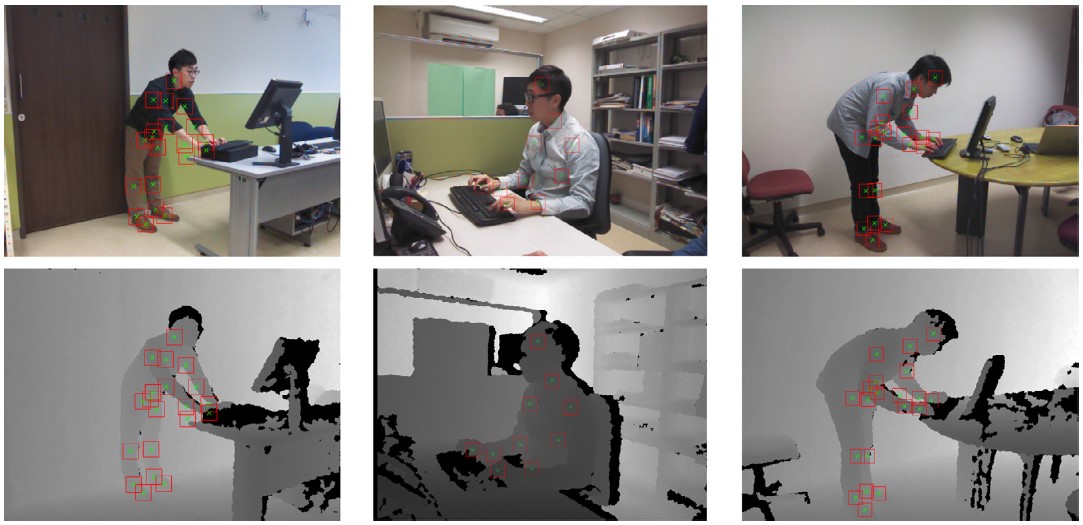

Smart environments and monitoring systems are popular research areas nowadays due to their potential to enhance quality of life. Applications such as human behavior analysis and workspace ergonomics monitoring can be automated, improving the well-being of individuals at minimal running cost. In this paper, we propose a framework that accurately classifies the nature of 3D postures obtained by Kinect using a max-margin classifier. Unlike previous work in the area, we integrate information about the reliability of the tracked joints to enhance the accuracy and robustness of our framework. As a result, apart from classifying general activities in different movement contexts, our method can classify the subtle differences between correctly and incorrectly performed movements in the same context. We demonstrate how our framework can be applied to evaluate a user’s posture and identify postures that may result in musculoskeletal disorders. Such a system can be used in workplaces such as offices and factories to reduce the risk of injury. Owing to the low cost and easy deployment of depth-camera-based motion sensors, our framework can be applied widely in homes and offices to facilitate smart environments.
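A minimal sketch of folding joint-tracking reliability into a max-margin classifier: each joint's coordinates are multiplied by a reliability weight, so unreliably tracked joints contribute less, and a linear SVM is trained by stochastic subgradient descent on the hinge loss (the Pegasos algorithm). The `RELIABILITY` mapping from Kinect-style tracking states to weights, the mean-centering used in place of a bias term, and all sample data are assumptions; the framework described above may integrate reliability differently.

```python
import random

# Hypothetical mapping from Kinect-style tracking state to a reliability weight
RELIABILITY = {"tracked": 1.0, "inferred": 0.5, "not_tracked": 0.0}


def posture_features(joints):
    """Flatten (x, y, z, state) joints, scaling each joint's coordinates by reliability."""
    feats = []
    for x, y, z, state in joints:
        w = RELIABILITY[state]
        feats.extend([w * x, w * y, w * z])
    return feats


def center(X):
    """Subtract the per-feature mean so the sketch can omit a bias term."""
    mean = [sum(col) / len(X) for col in zip(*X)]
    return [[v - m for v, m in zip(row, mean)] for row in X], mean


def pegasos(X, y, lam=0.01, epochs=100, seed=0):
    """Linear SVM (no bias) trained with Pegasos subgradient steps on the hinge loss."""
    rng = random.Random(seed)
    w = [0.0] * len(X[0])
    t = 0
    for _ in range(epochs):
        for i in rng.sample(range(len(X)), len(X)):
            t += 1
            eta = 1.0 / (lam * t)
            margin = y[i] * sum(wj * xj for wj, xj in zip(w, X[i]))
            w = [(1 - eta * lam) * wj for wj in w]  # shrink: L2 regularization
            if margin < 1:  # hinge loss active: move toward y_i * x_i
                w = [wj + eta * y[i] * xj for wj, xj in zip(w, X[i])]
    return w


def predict(w, mean, feats):
    score = sum(wj * (f - m) for wj, f, m in zip(w, feats, mean))
    return 1 if score >= 0 else -1


# Hypothetical samples with two joints each; +1 = correct posture, -1 = incorrect
samples = [
    [(0.00, 0.60, 0.05, "tracked"), (0.00, 0.30, 0.00, "tracked")],
    [(0.02, 0.58, 0.00, "tracked"), (0.00, 0.30, 0.02, "inferred")],
    [(0.00, 0.55, -0.25, "tracked"), (0.00, 0.30, -0.10, "tracked")],
    [(0.02, 0.52, -0.30, "tracked"), (0.00, 0.28, -0.12, "inferred")],
]
labels = [1, 1, -1, -1]
X, mean = center([posture_features(s) for s in samples])
w = pegasos(X, labels)
print([predict(w, mean, posture_features(s)) for s in samples])
```

Scaling features by reliability is only one option; another would be to pass reliability as extra features and let the classifier learn how much to trust each joint.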

Emotion is considered a core element of performance. In computer animation, body motions and facial expressions are the two popular media through which a character expresses emotion. However, there has been limited research on how to effectively synthesize these two types of character movement at different levels of emotion strength with intuitive control, which is difficult to model effectively. In this work, we explore a common model that can represent emotion for synthesizing both body motions and facial expressions. Unlike previous work that encodes emotions into discrete motion style descriptors, we propose a continuous control indicator called emotion strength, and present a data-driven approach that uses it to synthesize motions with fine control over emotions. Our method can be applied to interactive applications such as computer games, image editing tools, and virtual reality applications, as well as offline applications such as animation and movie production.
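As the simplest possible illustration of a continuous control indicator, the sketch below blends a neutral pose with a fully emotional pose using a strength value s in [0, 1], so emotion can be dialed up or down instead of being switched between discrete style labels. Linear blending of joint angles is a deliberately naive stand-in, not the data-driven method described above, and the joint names and angles in `neutral` and `sad` are hypothetical.

```python
def blend_pose(neutral, emotional, strength):
    """Interpolate joint angles (degrees) by a continuous emotion strength in [0, 1]."""
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must lie in [0, 1]")
    return {
        joint: (1 - strength) * neutral[joint] + strength * emotional[joint]
        for joint in neutral
    }


# Hypothetical joint angles for a neutral stance and a strongly 'sad' stance
neutral = {"neck_pitch": 0.0, "spine_pitch": 5.0, "shoulder_roll": 0.0}
sad = {"neck_pitch": 30.0, "spine_pitch": 20.0, "shoulder_roll": -15.0}

for s in (0.0, 0.5, 1.0):
    print(s, blend_pose(neutral, sad, s))
```

Real motion data would use rotations (for example quaternions with spherical interpolation) and whole motion sequences rather than a single pose of Euler angles.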

Dr. Ricky Kwok Cheong AU, BSocSc MPhil (HKU), PhD (Univ of Tokyo)

Assistant Professor, School of Social Sciences

Room A626; Tel: 3702 4528; rau at sfu.edu.hk

The School of Social Sciences is investigating emotional expressions with facial recognition, aiming to achieve higher accuracy in recognizing emotions in difficult scenarios, which remain challenging for the prior art.

Dr. Patrick Chi Wai LEE, MBA (Leicester, UK), MA (HKBU), MA (CityUHK), PhD (Newcastle UK)

Assistant Professor, School of Humanities and Languages

Theoretical Linguistics (syntax), Second Language Acquisition, Discourse Analysis

Room A617; Tel: 3702 4303; cwlee at sfu.edu.hk

The School of Humanities and Languages is investigating the different responses, or types of speech acts, used between interlocutors (particularly in workplace English for students), in order to understand their functions in specific scenarios.

Dr. Charles Kin Man CHOW, BSc (CityUHK), MBA (HKPolyU), MSc PGCE (HKU), DBA (Newcastle, Australia)

Assistant Professor, Rita Tong Liu School of Business and Hospitality Management

Management information systems, e-commerce, and e-service quality

Room A511; Tel: 3702 4568; cchow at sfu.edu.hk

Current work includes smart hotels, for example smart scenery and smart guest services. Other areas in accounting and finance are also being explored.

In a previous study (Chow, 2017), Dr. Charles Chow examined how web design, responsiveness, reliability, enjoyment, ease of use, security, and customization influence the e-service quality of online hotel booking agencies in Hong Kong. These factors were found to positively influence e-service quality. The research provided new insights for industry practitioners, with recommendations for designing and implementing online hotel booking websites. Taking these findings into consideration, online hotel booking companies can concentrate their resources on the identified dimensions, in particular web design and customization, to achieve the desired level of e-service quality.

Reference

Chow, K. M. (2017). E-service quality: A study of online hotel booking websites in Hong Kong. Asian Journal of Economics, Business and Accounting, 3(4), 1-13.

Introductory Guide to Artificial Intelligence

Artificial Intelligence Programmes

Deep Learning course at Univ Wisconsin-Madison

Introduction to Deep Learning (CUHK)

Deep Learning, by Ian Goodfellow, Yoshua Bengio, and Aaron Courville

Is LSTM Dead, Long Live Transformers

How do Transformers Work in NLP?

AI for Everyone (deeplearning.ai)

Deep Learning Specialization (deeplearning.ai)

NeurIPS Online proceedings (neurips.cc)

How Artificial Intelligence Helps in Health Care

Artificial Intelligence Will Change Healthcare as We Know It

AI In Health Care: The Top Ways AI Is Affecting The Health Care Industry

Surgical robots, new medicines and better care: 32 examples of AI in healthcare

6 Nurse AI Robots That Are Changing Healthcare in 2023

The benefits of AI in healthcare (July 2023)

Ethics and governance of AI for health (June 2021) by WHO

Generative AI Will Transform Health Care Sooner Than You Think (June 2023)

HL7: develops American National Standards Institute (ANSI)-accredited standards for the exchange, integration, sharing, and retrieval of electronic health information that supports clinical practice and the management, delivery, and evaluation of health services.

CCD: the Continuity of Care Document is built using HL7 Clinical Document Architecture (CDA) elements and contains data defined by the American Society for Testing and Materials (ASTM) Continuity of Care Record (CCR), in order to share summary information about the patient within the broader context of the personal health record.

Jiang F, Jiang Y, Zhi H, et al., "Artificial intelligence in healthcare: past, present and future," Stroke and Vascular Neurology, vol. 2, 2017, doi: 10.1136/svn-2017-000101.

Dhamdhere P, Harmsen J, Hebbar R, et al., "Big Data in Healthcare," 2016.

Joyce C, "Harnessing the Power of Data in Health Care: Data as a Strategic Asset," Symposium on Data Science for Healthcare (DaSH), 2017.

Ettinger A, "Data and Information Management in Public Health," July 2004.

AI for Medicine Specialization (deeplearning.ai)

The Place of Management in an AI Curriculum

Managing Human and Machine Intelligence

Future of Social Work, GISW Anniversary Celebration, Nov 23 2018.

Future of Social Work: International Perspectives

How Artificial Intelligence Will Save Lives in the 21st Century

Artificial Intelligence Ideas and Social Work

Deep Learning for NLP: An Overview of Recent Trends

AI for Natural Language Processing Specialization (deeplearning.ai)

Why Andrew Ng favours data-centric systems over model-centric systems